Regularizer Machine and Deep Learning: The Complete Guide 2025

Deep learning has become one of the most transformative fields in technology, driving innovations in image recognition, natural language processing, and even medical diagnostics. But what keeps these powerful models accurate and prevents them from becoming overly complex? That’s where regularization comes in. Regularization techniques in machine learning and deep learning act like guardrails, keeping models from “over-learning” their training data or being misled by noise in it. In this guide, we’ll explore regularizers, why they matter, and how they help keep deep learning models effective and reliable.

Introduction to Regularization

At its core, regularization is a way to keep machine learning models on track. In deep learning, where models can become exceedingly complex, regularization serves as a guiding force, preventing them from getting too tangled up in the fine details of the data. Imagine you’re studying for an exam: if you memorize every single example without understanding the concepts, you might do well on practice tests but struggle with real-world questions. This is similar to what happens when a model “overfits.” Regularization methods help models focus on the main ideas without getting bogged down by irrelevant details, leading to a balanced and adaptable model that performs well on new data.

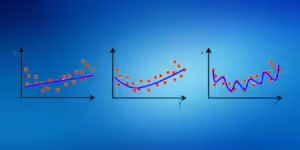

Understanding Overfitting and Underfitting

Regularization is best understood through two common failure modes in machine learning: overfitting, which it counteracts, and underfitting, which too much of it can cause. Let’s take a closer look.

Overfitting

Overfitting happens when a model learns the training data too well, capturing all the noise and specific patterns rather than general trends. This results in a model that performs well on training data but poorly on new data because it has become too specialized.

Underfitting

On the other end of the spectrum is underfitting. This happens when a model is too simple to capture the underlying patterns in the data. It’s like trying to predict weather patterns using only the temperature from one day.

Regularization helps prevent overfitting by adding a slight penalty for complexity, encouraging the model to focus on broader trends.
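To make the two failure modes concrete, here is a minimal sketch in Python with NumPy. The noisy sine data, the polynomial degrees, and the helper name `fit_and_score` are all illustrative assumptions, not something prescribed by any particular library or workflow.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: a noisy sine curve with few training points.
x_train = np.linspace(0.0, 1.0, 12)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.2, x_train.shape)
x_test = np.linspace(0.02, 0.98, 50)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0.0, 0.2, x_test.shape)

def fit_and_score(degree):
    """Fit a polynomial of the given degree; return (train MSE, test MSE)."""
    model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    train_mse = float(np.mean((model(x_train) - y_train) ** 2))
    test_mse = float(np.mean((model(x_test) - y_test) ** 2))
    return train_mse, test_mse

# Degree 1 is too simple for a sine wave, degree 9 has enough freedom
# to chase the noise in 12 points, degree 3 sits in between.
scores = {degree: fit_and_score(degree) for degree in (1, 3, 9)}
for degree, (train_mse, test_mse) in scores.items():
    print(f"degree {degree}: train MSE {train_mse:.3f}, test MSE {test_mse:.3f}")
```

Training error can only go down as the degree grows, so a low training error alone says little. The gap between training and test error is the signal to watch: a straight line does badly on both (underfitting), while a very flexible fit tends to look excellent on the training points and noticeably worse on held-out data (overfitting).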

What is a Regularizer Machine?

A “regularizer machine” isn’t actually a machine but rather a concept that refers to the various techniques applied to machine learning and deep learning models to improve their accuracy and generalization. Think of it as a toolkit of techniques designed to help models avoid overfitting without tipping into underfitting. The term “regularizer machine” is simply a way of describing the broad set of tools available for controlling model complexity and improving reliability.

Types of Regularization Techniques

There are several regularization methods available, each suited to different needs.

- L1 and L2 Regularization: Techniques that penalize weights in the model to prevent reliance on any single feature.

- Dropout: A process of randomly turning off neurons in a neural network to prevent overreliance on specific patterns.

- Batch Normalization: Standardizes the inputs to each layer over a mini-batch to stabilize and speed up training.

- Data Augmentation: Expands the dataset by creating modified versions of data samples to increase robustness.

L1 and L2 Regularization

L1 Regularization (Lasso)

L1 regularization, also known as Lasso regularization, adds a penalty proportional to the absolute value of each model weight. This has the effect of driving some weights to exactly zero, effectively removing less important features. It’s like pruning a tree: only the essential branches (features) are kept.
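The zeroing effect of an L1 penalty can be seen in a small NumPy sketch. It assumes a linear regression objective and uses proximal gradient descent (often called ISTA) as the solver, which is one common choice among several. The function names, the toy data, and the value `lam=0.1` are hypothetical.

```python
import numpy as np

def soft_threshold(w, t):
    """Proximal step for the L1 penalty: shrink by t, clipping at exactly zero."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

def lasso_ista(X, y, lam, n_iter=500):
    """Minimize (1/2n)||Xw - y||^2 + lam * ||w||_1 by proximal gradient descent."""
    n, d = X.shape
    # Step size = 1 / Lipschitz constant of the smooth part's gradient.
    step = n / (np.linalg.norm(X, 2) ** 2)
    w = np.zeros(d)
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 0.0, 0.0])  # only two features matter
y = X @ true_w + rng.normal(0.0, 0.1, size=200)

w = lasso_ista(X, y, lam=0.1)
print(np.round(w, 3))
```

In this setup the four irrelevant weights should end up at exactly zero, while the two informative weights survive, pulled slightly toward zero by the penalty. That small bias is the price of the built-in feature selection.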

L2 Regularization (Ridge)

L2 regularization, or Ridge regularization, applies a penalty based on the square of each weight. This method typically doesn’t reduce weights to exactly zero. Instead, it shrinks all weights toward zero and penalizes large weights most heavily, reducing their impact without removing them entirely. L2 regularization is useful for models where all features are likely to carry some significance but none should dominate the model.
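For linear regression, the L2 penalty has a closed-form solution, which makes its shrinking behavior easy to observe. The sketch below assumes that linear setting (deep learning frameworks usually apply the same idea as “weight decay” inside the optimizer instead). The name `ridge_fit`, the toy data, and the penalty strengths are illustrative.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution: w = (X^T X + lam * I)^(-1) X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = X @ np.array([2.0, -1.0, 0.5, 0.0, 0.0]) + rng.normal(0.0, 0.5, size=100)

# Larger lam means a stronger penalty and a smaller weight vector.
norms = []
for lam in (0.0, 10.0, 1000.0):
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    print(f"lam={lam:>6}: ||w|| = {norms[-1]:.3f}, weights = {np.round(w, 3)}")
```

The norm of the weight vector drops as `lam` grows, but the individual weights get small rather than vanishing. That is the practical difference from L1: every feature keeps some influence.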

Dropout Regularization

Dropout is a deep learning technique in which randomly selected neurons are “dropped,” or turned off, during each training iteration. It’s like a team of workers taking turns so that each one gets a chance to contribute without any single worker becoming too critical. This forces the model to rely on multiple pathways to learn patterns, making it less likely to become overly attached to specific data features.
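A minimal sketch of the idea in NumPy follows. It uses the “inverted dropout” variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The function name, its signature, and the drop probability of 0.5 are assumptions for illustration, not a framework API.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero a random subset of units and rescale the rest."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return activations  # at inference time the layer is a no-op
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob  # True = unit stays on
    # Dividing by keep_prob keeps the expected activation unchanged.
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
h = np.ones((4, 1000))                     # hypothetical hidden activations
h_train = dropout(h, 0.5, rng, training=True)
h_eval = dropout(h, 0.5, rng, training=False)
print("fraction dropped:", float(np.mean(h_train == 0.0)))
print("mean activation during training:", float(h_train.mean()))
```

A fresh mask is drawn on every call, so each training step sees a different thinned network. The rescaling is what lets the same weights be used unchanged once dropout is switched off.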

Batch Normalization

Batch normalization helps maintain stability and accelerates training by normalizing the inputs to a layer across each mini-batch: it subtracts the batch mean, divides by the batch standard deviation, and then applies a learned scale and shift. By standardizing these inputs, batch normalization reduces fluctuations and keeps training progress consistent. This technique is especially helpful in deep networks, where small inconsistencies can have a large impact over many layers.
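The forward computation is short enough to write out. This NumPy sketch covers training mode only, for a two-dimensional (batch, features) input. The function name and the toy input are hypothetical, and a real layer would also track running averages of the mean and variance to use at inference time.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for a (batch, features) array."""
    mean = x.mean(axis=0)                     # per-feature mean over the batch
    var = x.var(axis=0)                       # per-feature variance over the batch
    x_hat = (x - mean) / np.sqrt(var + eps)   # roughly zero mean, unit variance
    return gamma * x_hat + beta               # learned scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))   # hypothetical layer inputs
out = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print("per-feature mean:", np.round(out.mean(axis=0), 6))
print("per-feature std: ", np.round(out.std(axis=0), 3))
```

With `gamma` set to ones and `beta` to zeros, each feature comes out with a mean close to 0 and a standard deviation close to 1, regardless of the scale it came in with. In a trained network, `gamma` and `beta` let the layer undo part of that normalization if doing so helps.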

Data Augmentation

In scenarios where there isn’t enough training data, data augmentation can be a lifesaver. By creating slight variations of the original data (such as rotating, zooming, or cropping images), data augmentation effectively expands the training set. This technique enables models to recognize patterns in a more diverse range of scenarios, reducing their dependence on specific instances and improving generalization.
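As a rough illustration, the sketch below augments an image stored as a NumPy array using a random horizontal flip and a random crop. The flip is an added assumption here (it is a common augmentation but only makes sense when mirrored images are still valid examples), and rotation or zooming would normally be done with an image library rather than by hand. The function name `augment` and the `pad` parameter are hypothetical.

```python
import numpy as np

def augment(image, rng, pad=2):
    """Random horizontal flip, then a random crop back to the original size."""
    if rng.random() < 0.5:
        image = image[:, ::-1]                # mirror left-right
    h, w = image.shape[:2]
    # Pad height and width only; leave any channel axis untouched.
    pad_width = ((pad, pad), (pad, pad)) + ((0, 0),) * (image.ndim - 2)
    padded = np.pad(image, pad_width, mode="reflect")
    top = rng.integers(0, 2 * pad + 1)        # random vertical offset
    left = rng.integers(0, 2 * pad + 1)       # random horizontal offset
    return padded[top:top + h, left:left + w]

rng = np.random.default_rng(0)
img = np.arange(8 * 8 * 3, dtype=np.float32).reshape(8, 8, 3)  # toy 8x8 RGB image
batch = [augment(img, rng) for _ in range(4)]
print([a.shape for a in batch])
```

Each call returns a slightly shifted, possibly mirrored copy with the same shape as the input, so the augmented images can be fed to the model exactly like the originals. The label stays the same, which is why only label-preserving transformations belong in an augmentation pipeline.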

Regularization and Neural Networks

Regularization is crucial in neural networks, where numerous layers can lead to complex, interconnected patterns. In these deep networks, the risk of overfitting increases as the model may try to “memorize” rather than “learn.” Regularization techniques help these layers work together to generalize their learning, making the model resilient across different datasets and applications.

Advantages of Using Regularizers

Regularizers offer several benefits that contribute to the efficiency and accuracy of deep learning models:

- Enhanced Accuracy: Regularizers help models generalize better, resulting in higher accuracy on unseen data.

- Model Simplicity: Techniques like L1 regularization help remove unnecessary features, simplifying the model.

- Reduced Complexity: Regularizers constrain the model’s effective complexity, and sparsity-inducing methods such as L1 can also shrink the model itself, making deployment easier and more efficient.

Each advantage plays a significant role in making machine learning models more robust and reliable in real-world applications.

Regularization Challenges

While regularization brings many benefits, it also has its own set of challenges. Applying too much regularization can lead to underfitting, where the model becomes too simple and fails to learn meaningful patterns. Moreover, finding the right balance requires experimentation and fine-tuning, as each dataset and model structure responds differently to various regularization techniques.
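One common way to find that balance is to try several regularization strengths and compare them on held-out validation data. The sketch below does this for ridge regression on polynomial features. The data, the candidate `lambdas`, and the helper names are illustrative assumptions, and for simplicity the penalty is applied to the intercept term as well.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Hypothetical data: noisy sine, expanded into degree-9 polynomial features."""
    x = rng.uniform(-1.0, 1.0, n)
    y = np.sin(3.0 * x) + rng.normal(0.0, 0.1, n)
    return np.vander(x, 10, increasing=True), y

X_train, y_train = make_data(30)
X_val, y_val = make_data(200)

def ridge_fit(X, y, lam):
    """Closed-form ridge solution (penalizes every weight, intercept included)."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

lambdas = [1e-6, 1e-3, 1e-1, 1e1, 1e4]
val_errors = [mse(X_val, y_val, ridge_fit(X_train, y_train, lam)) for lam in lambdas]
best_lam = lambdas[int(np.argmin(val_errors))]

for lam, err in zip(lambdas, val_errors):
    print(f"lam={lam:g}: validation MSE {err:.4f}")
print("selected lam:", best_lam)
```

At the largest setting the weights are squeezed almost to zero and the model can no longer follow the curve, which is underfitting caused by the regularizer itself. The selected value sits somewhere below that extreme, and where exactly depends on the data, so the sweep has to be repeated for each new problem.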

Future of Regularizer Machines in Deep Learning

The role of regularizers is expected to expand as deep learning continues to evolve. With the growing complexity of AI models, regularization will be essential for ensuring stability and scalability. Researchers are likely to develop new methods that help models handle vast, complex datasets in diverse applications. The future of regularization will likely focus on adaptive techniques that dynamically adjust to the requirements of different machine learning and deep learning tasks, making AI more accessible and practical for a wide range of industries.

Conclusion

Regularization is a cornerstone of modern machine learning and deep learning, ensuring that models remain accurate, reliable, and efficient. By preventing overfitting and simplifying complex models, regularization enhances the performance of machine learning applications across many domains. Whether it’s through L1 and L2 penalties, dropout, batch normalization, or data augmentation, regularization techniques provide the necessary guidance for building models that work well in the real world.

FAQs

What is a regularizer in machine learning and deep learning?

A regularizer is a technique applied to machine learning models to prevent overfitting by limiting the model’s complexity, leading to better generalization.

Why is dropout regularization effective?

Dropout regularization reduces overfitting by temporarily removing random neurons during training, which forces the model to learn from a variety of pathways rather than relying on specific neurons.

What’s the difference between L1 and L2 regularization?

L1 regularization penalizes a model based on the absolute values of the weights, leading to some weights being zeroed out. L2 regularization uses squared weight values, causing weights to shrink but not reach zero, which maintains a balance among features.

How does regularization affect model training?

Regularization can slightly slow down training, as it adds a penalty term to the loss function, but it generally results in more accurate, generalized models that perform well on new data.

Is regularization always necessary in deep learning?

Not always, but it’s highly beneficial in complex models where the risk of overfitting is higher. Regularization helps in balancing accuracy and generalization, which is often critical for real-world applications.
